Freehand Interaction

Real world physics

Full Hand Occlusion

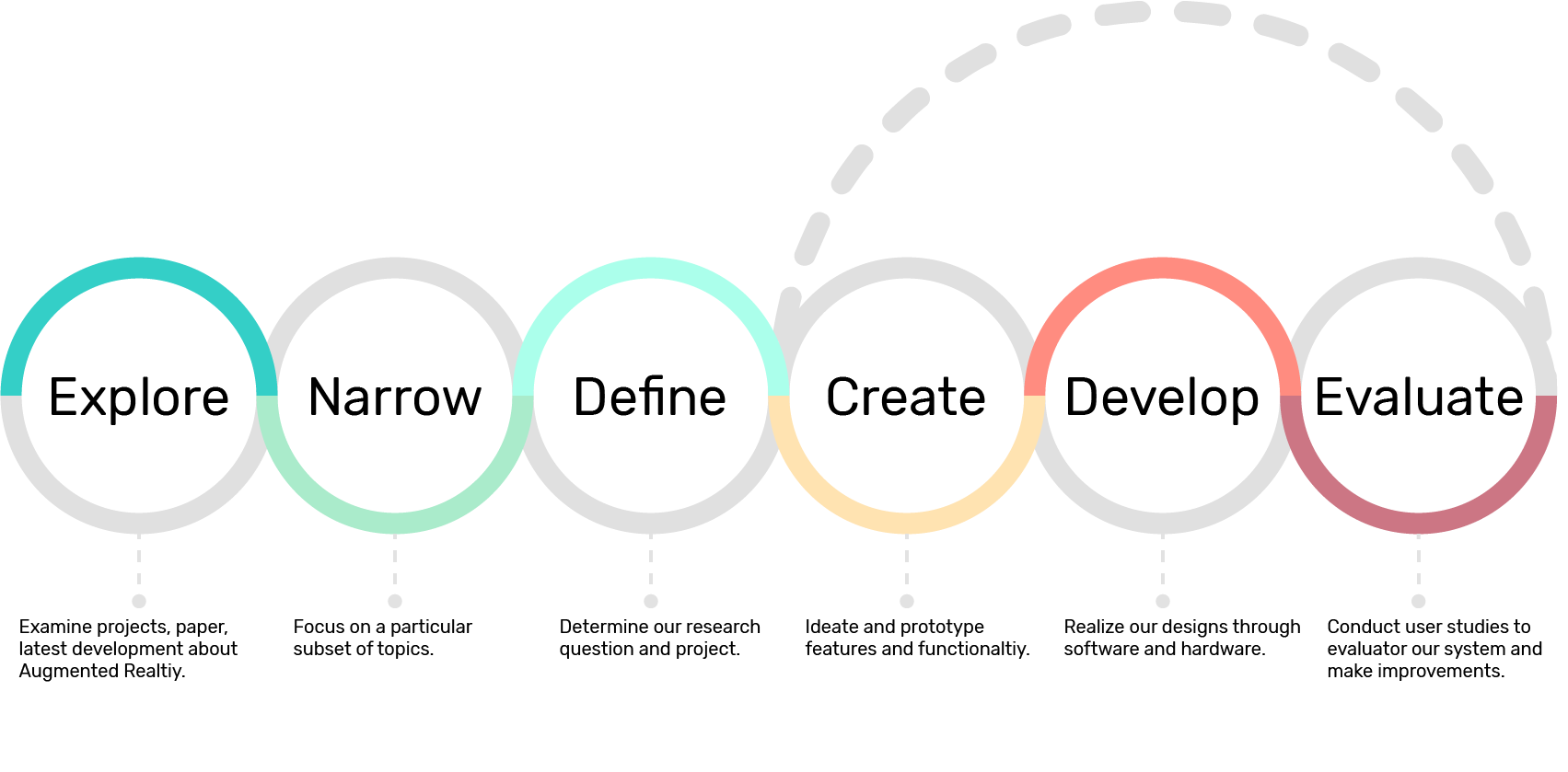

Smartphone augmented reality (AR) lets users interact with physical and virtual spaces simultaneously. With 3D hand tracking, smartphones become apparatus to grab and move virtual objects directly. Based on design considerations for interaction, mobility, and object appearance and physics, we implemented a prototype for portable 3D hand tracking using a smartphone, a Leap Motion controller, and a computation unit. Following an experience prototyping procedure, 12 researchers used the prototype to help explore usability issues and define the design space. We identified issues in perception (moving to the object, reaching for the object), manipulation (successfully grabbing and orienting the object), and behavioral understanding (knowing how to use the smartphone as a viewport).

To overcome these issues, we designed object-based feedback and accommodation mechanisms and studied their perceptual and behavioral effects via two tasks: picking up distant objects, and assembling a virtual house from blocks. Our mechanisms enabled significantly faster and more successful user interaction than the initial prototype in picking up and manipulating stationary and moving objects, with a lower cognitive load and greater user preference. The resulting system - Portal-ble - improves user intuition and aids free-hand interactions in mobile situations.

To evaluate the experience of free-hand interactions in smartphone AR, we developed an initial prototype system. Its design and construction were informed by the latest literature on interactions in AR. For hardware, we used a Samsung S9+ Android smartphone and a Leap Motion hand-tracking controller, and we developed our AR software system with Google ARCore and the Unity SDK. The System Setup section describes in detail the final hardware and software system we used for Portal-ble.

To identify critical usability issues in free-hand AR interactions, we employed a methodology called experience prototyping, which exposes usability issues by recruiting users to interact with our initial prototype (more detail is given in the next section). Using this method, we discovered four main usability issues, all of which informed and guided the final design and implementation of Portal-ble. We then evaluated our system in a user study with 12 participants. The results of the user study showed that Portal-ble effectively alleviated or resolved these usability issues.

We recruited 24 participants to complete two types of tasks, one content manipulation task and one content creation task, with our initial smartphone prototype. Content manipulation means interacting with virtual objects through actions such as selection, rotation, or translation. Example tasks included throwing darts, stacking cubes, and picking up lamps. Content creation refers to drawing in mid-air directly with the hands. Participants might be asked to draw cubes, write their names, or draw lines across a room.

Each session was video-recorded, and participants were asked to think aloud during the tasks. Interviews were conducted after the sessions to gather information about their experiences and the problems they encountered. The usability issues we identified are discussed in the Findings section.

We analyzed the data collected from our experience prototyping sessions and grouped the major usability issues into the four listed below. The title lines of the issues are quoted directly from our UIST paper.

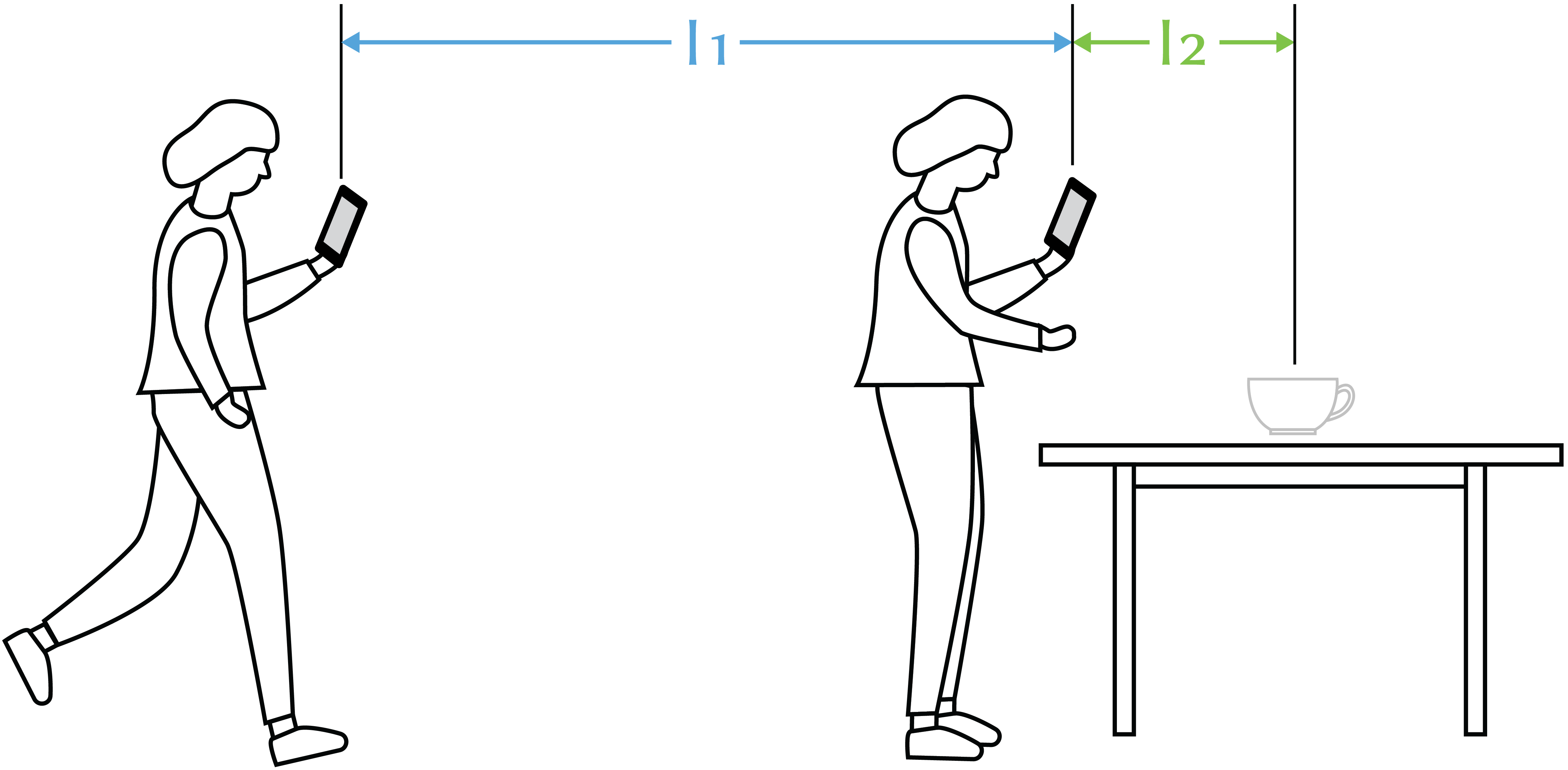

Issue 1 (I1): "Participants hesitated when approaching distant virtual objects."

Participants found it to be challenging to estimate exactly how far away virtual objects were from them, although they could walk in the general direction towards the objects.

Issue 2 (I2): "Participants were uncertain how to map the spatial distance to virtual objects."

Participants did not know how far they should stretch their arms and hands out to interact with virtual objects, although they were within the participants’ reach.

Issue 3 (I3): "Participants experienced difficulties picking up and releasing virtual objects, aggravated by unintentional dropping."

Some participants reported that sometimes virtual objects they were grabbing would drop from their hands, although they had no intentions to do so. Others had difficulties picking up virtual objects.

Issue 4 (I4): "Participants lack a general mental model for free-hand direct manipulation on the smartphone."

We observed that various strategies were used by participants to figure out how to manipulate virtual objects with their hands. In order to find the right distance to pick up a virtual object, some moved their hands around while keeping the smartphone still, while some others simultaneously adjusted their hand and smartphone positions.

We further categorized I1 and I2 as perception issues, I3 as manipulation issues, and I4 as behavior issues. We developed Portal-ble feedback mechanisms to mainly address I1 and I2. Solutions to all issues are illustrated in the Solutions section.

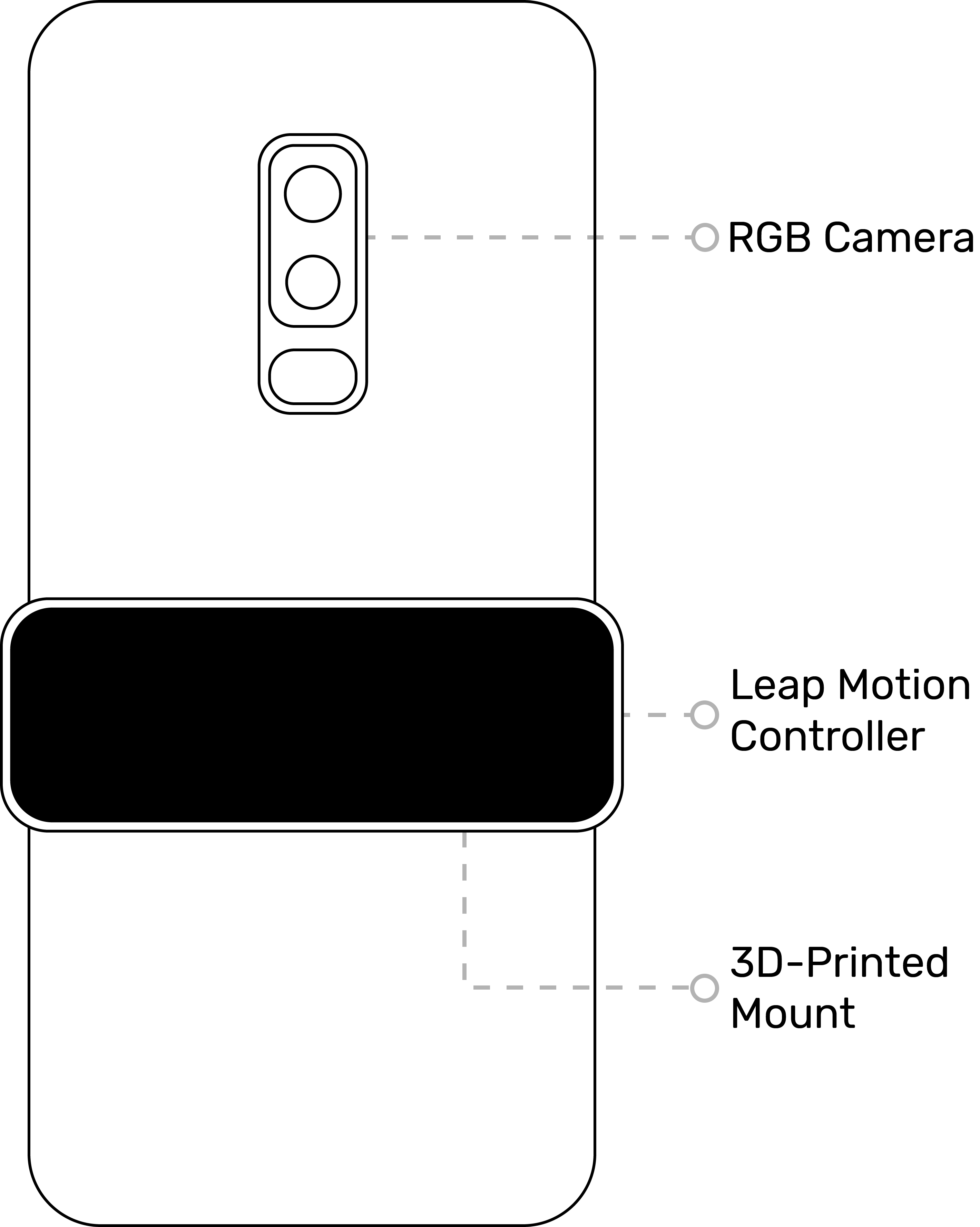

Portal-ble consists of a set of hardware components and open-source software.

The main hardware component is a Samsung S9+ Android phone equipped with a dual-lens RGB camera. To track users’ hand movements, a Leap Motion controller is attached to the smartphone’s back via a customized 3D-printed mount with suction cups.

The AR interaction logic is developed using the Unity SDK and Google’s ARCore SDK. To sync hand data tracked by the Leap Motion controller with Unity and ARCore, we used an external portable computation unit (a computer stick plus a power bank) to relay the data to the smartphone wirelessly.

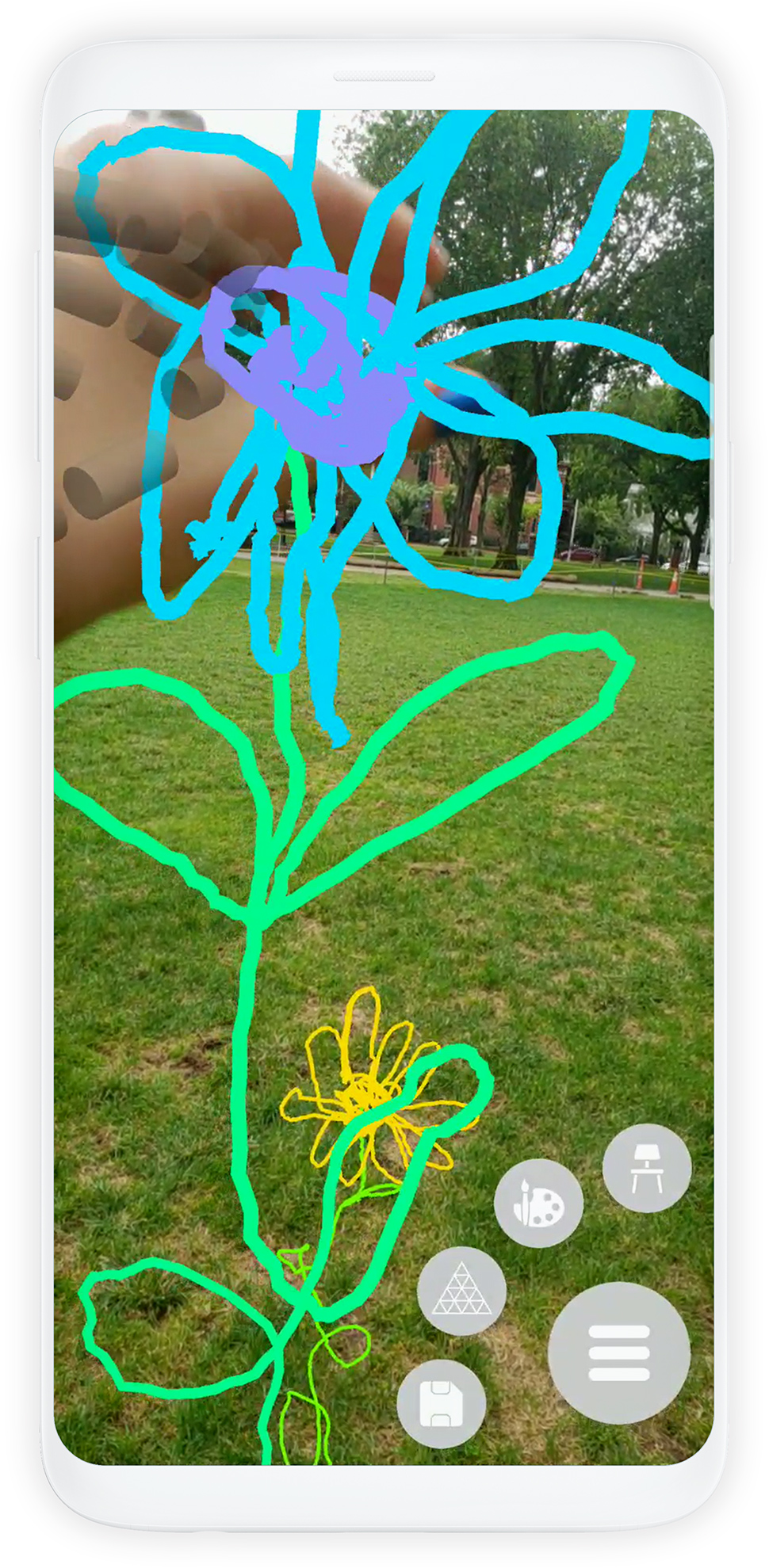

Inspired by Google's Material Design, I placed a floating action button in the lower left corner to serve as the entry point to all non-free-hand interactions. Keeping traditional 2D UI to a minimum in Portal-ble also contributes to the overall immersive experience. When pressed, the floating action button expands into a full menu of functionality options. When the user switches to 3D painting mode, a painting toolbar appears on the side.

Floating Action Button

Expanded Menu

3D Painting Toolbar

Here we describe in detail the feedback mechanisms and accommodations that we designed and implemented to address the usability issues listed in Findings. Accommodations that are more algorithmic in nature (the solutions to Issues 3 and 4) are omitted here. Please refer to the paper for more technical information.

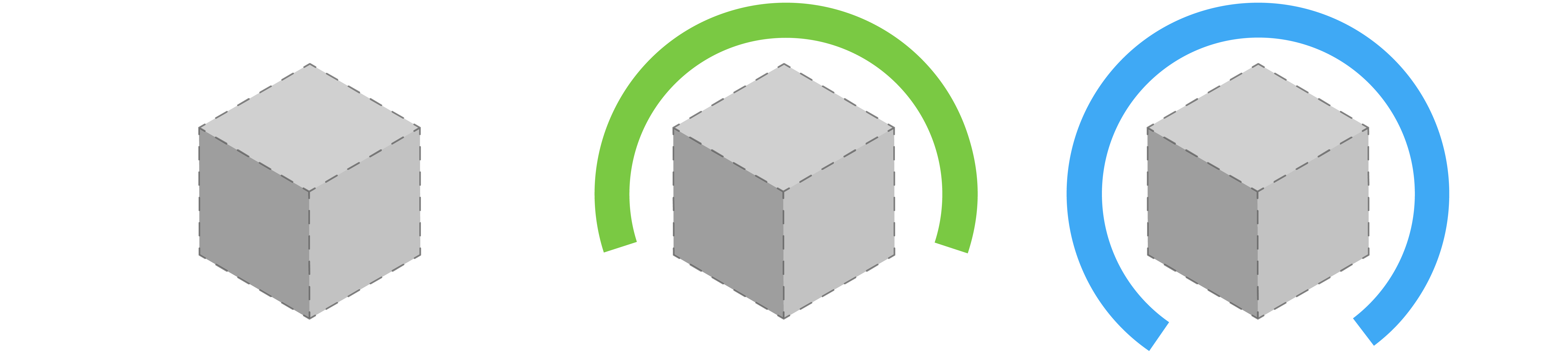

To address I1, we designed a two-stage visual feedback mechanism and named it the progress wheel. For each virtual object in the AR world, a progress wheel appears around the object and fills as the user approaches that object. The wheel is green while the object is beyond the user’s reach and turns blue once the object is within reachable distance (we set this to 75 cm, the average length of a human arm), indicating to the user that the object is now within interactable range.
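The two-stage behavior of the progress wheel can be illustrated with a minimal Python sketch. It is not the Unity implementation used in Portal-ble: the linear fill, the `start_m` distance at which the wheel begins to fill, and all names are assumptions made for illustration. Only the 75 cm reach threshold and the green/blue colors come from the description above.

```python
from dataclasses import dataclass

REACH_M = 0.75  # reachable distance stated in the text (75 cm)


@dataclass
class WheelState:
    fill: float  # 0.0 (empty) to 1.0 (full)
    color: str   # "green" beyond reach, "blue" within reach


def progress_wheel(distance_m: float,
                   start_m: float = 3.0,      # hypothetical: where filling begins
                   reach_m: float = REACH_M) -> WheelState:
    """Map the user-to-object distance to a wheel fill level and color."""
    if distance_m <= reach_m:
        # Stage two: the object is within arm's reach.
        return WheelState(fill=1.0, color="blue")
    # Stage one: fill linearly (an assumption) while the user approaches.
    fill = (start_m - distance_m) / (start_m - reach_m)
    fill = max(0.0, min(1.0, fill))
    return WheelState(fill=fill, color="green")
```

Calling `progress_wheel` once per frame with the current distance would give the renderer both values it needs; a non-linear fill curve could be swapped in without changing the color logic.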

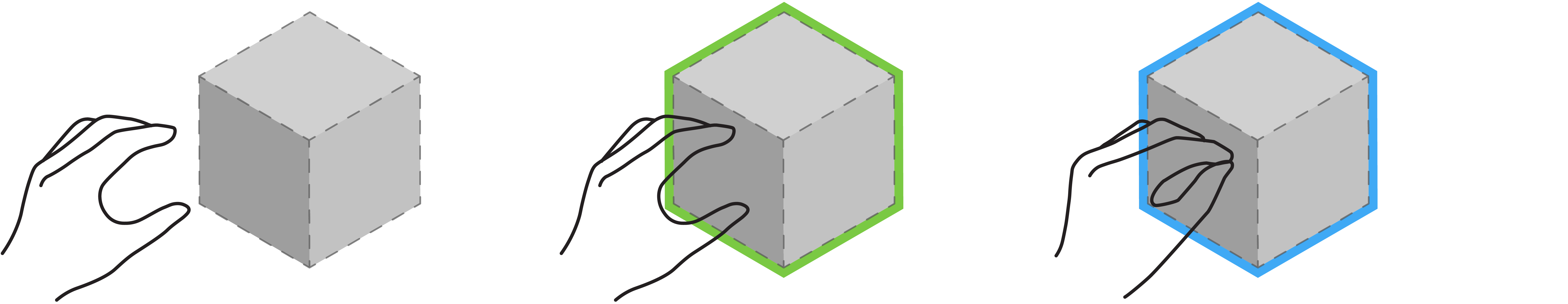

To address I2, we created a visual signal that informs users of changes in a virtual object’s interaction state. When a user’s hand touches an object, a thick green contour line appears around the object to signify that it is ready to be interacted with. Once the user successfully grabs the object, the contour line turns blue to indicate the change in interaction state.
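The highlight logic amounts to a small state mapping, sketched below in Python. The state names and the two boolean inputs are hypothetical; in the actual system they would come from the hand tracker and the grab detection described in the paper.

```python
from enum import Enum
from typing import Optional


class Interaction(Enum):
    IDLE = "idle"        # no hand contact
    TOUCHED = "touched"  # hand is in contact with the object
    GRABBED = "grabbed"  # object is held


# Contour colors taken from the description above; IDLE shows no contour.
CONTOUR_COLOR = {
    Interaction.IDLE: None,
    Interaction.TOUCHED: "green",
    Interaction.GRABBED: "blue",
}


def interaction_state(hand_in_contact: bool, is_grabbing: bool) -> Interaction:
    """Derive the object's interaction state from two (hypothetical) tracker flags."""
    if hand_in_contact and is_grabbing:
        return Interaction.GRABBED
    if hand_in_contact:
        return Interaction.TOUCHED
    return Interaction.IDLE


def contour_color(hand_in_contact: bool, is_grabbing: bool) -> Optional[str]:
    """Return the contour color to draw, or None when no contour is shown."""
    return CONTOUR_COLOR[interaction_state(hand_in_contact, is_grabbing)]
```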

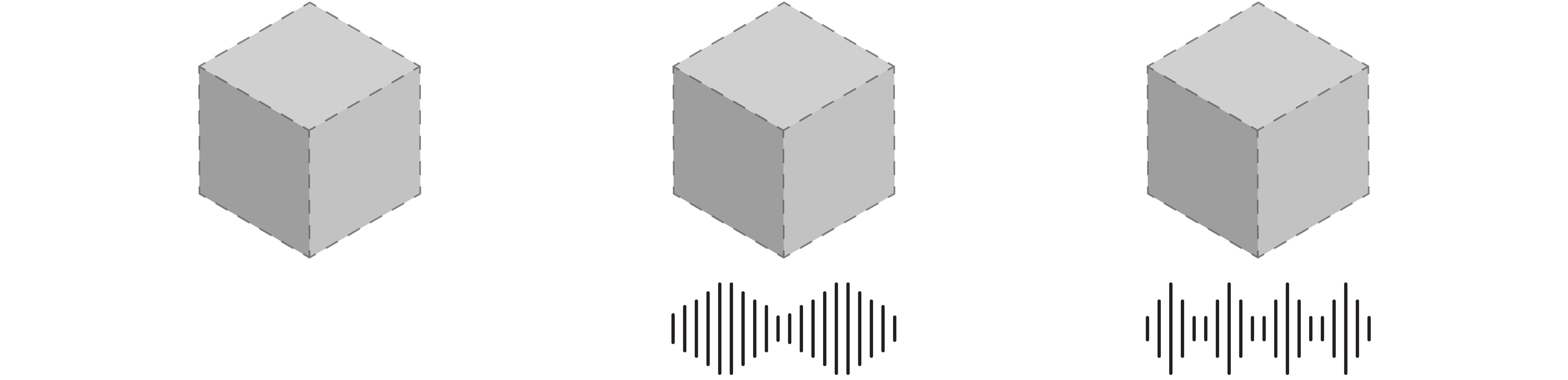

Besides visual cues, audio cues can also help users estimate the physical distance between themselves and virtual objects. Our sound feedback mechanism plays at an increasingly higher tempo as a user approaches an object, indicating that the user is getting closer to it. Only one object at a time can emit sound, because multiple objects generating sound simultaneously causes confusion. The sound-emitting object is chosen based on a cylindrical projection cast from the AR camera through the center of the user’s palm (refer to the paper for more technical details).
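As a rough illustration of the two ideas in this paragraph, the Python sketch below picks a single sounding object inside a cylinder around the camera-to-palm ray and maps distance to a beep interval. The cylinder radius, the tie-breaking rule (nearest object along the ray), the linear tempo mapping, and all parameter values are assumptions; the paper describes the actual selection method.

```python
import math
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def pick_sounding_object(camera: Vec3, palm: Vec3, objects: Sequence[Vec3],
                         radius_m: float = 0.15) -> Optional[int]:
    """Return the index of the nearest object inside the cylinder, or None."""
    axis = _sub(palm, camera)
    length = math.sqrt(_dot(axis, axis))
    if length == 0.0:
        return None  # camera and palm coincide, so no ray is defined
    direction = (axis[0] / length, axis[1] / length, axis[2] / length)
    best_index, best_along = None, math.inf
    for i, obj in enumerate(objects):
        rel = _sub(obj, camera)
        along = _dot(rel, direction)  # distance along the ray
        if along <= 0.0:
            continue  # object is behind the camera
        perp = math.sqrt(max(0.0, _dot(rel, rel) - along * along))
        if perp <= radius_m and along < best_along:
            best_index, best_along = i, along
    return best_index


def beep_interval_s(distance_m: float, near_m: float = 0.1, far_m: float = 3.0,
                    fastest_s: float = 0.1, slowest_s: float = 1.0) -> float:
    """Shorter interval (higher tempo) as the distance shrinks; linear by assumption."""
    t = (distance_m - near_m) / (far_m - near_m)
    t = max(0.0, min(1.0, t))
    return fastest_s + t * (slowest_s - fastest_s)
```

Restricting the candidates to a cylinder around the palm ray is what keeps the feedback tied to the object the user is reaching for, rather than to whichever object happens to be closest to the phone.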

Similar to the highlight, a short vibration (25 ms) from the smartphone signals changes in interaction state. When a user successfully grabs a virtual object, the phone vibrates to confirm the change to the grabbing state. Haptic feedback is also triggered when a virtual object collides with another virtual object, mimicking real-world physics.

Below are some practical use cases of Portal-ble.

By addressing the usability issues with feedback mechanisms presented above, Portal-ble aims to improve the overall intuitiveness and user experience of free-hand interactions in smartphone AR. To evaluate this, we designed and conducted a user study to understand the efficacy of Portal-ble.

We used our initial smartphone AR prototype without any feedback or accommodation systems as a baseline, and compared how fast and successfully the participants could perform designed tasks using Portal-ble and the baseline. We tested the following research hypothesis:

"Portal-ble will improve user depth perception and success in grabbing and manipulating virtual objects, and reduce user cognitive effort." Two tasks were originally designed and conducted in the user study, but only one is presented here for brevity. Please refer to our paper for full details.

Task: Approaching and Grabbing Virtual Objects

In this task, participants were asked to move towards and pick up a distant virtual object placed on a small table. In most AR studies, virtual objects stay stationary, and the task becomes more challenging when objects move. Thus, to test the efficacy of Portal-ble more thoroughly, we divided this task into two subtasks: picking up a stationary object (Task 1.1) and picking up a randomly moving object (Task 1.2).

Completing the task involves the two perception issues mentioned in Findings: moving towards the target object (I1) and grabbing the target object (I2). Participants were assigned to use pairs of feedback mechanisms presented above instead of isolated ones. Specifically, the pairs were formed from these four feedback conditions: progress wheel for I1, sound for I1, haptics for I2, and highlight for I2. Additionally, the baseline condition and a physical condition in which participants picked up a physical object were tested.

| Task | Condition | Number of Trials |
|---|---|---|
| Approaching and Grabbing | Progress Wheel | 3 (×2) |
| | Sound | 3 (×2) |
| | Haptics | 3 (×2) |
| | Highlight | 3 (×2) |
| | Baseline | 3 (×2) |
| | Physical | 3 |

For metrics, we collected completion time and success rate for each trial within the task. Completion times were recorded via an on-screen timestamp button. In addition, we used the NASA Task Load Index (NASA-TLX) assessment tool to measure cognitive load.
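A minimal sketch of how such per-trial records could be aggregated per condition is given below. The record layout `(condition, completion_time_s, succeeded)` is hypothetical, and averaging completion time over all trials, including failed ones, is an assumption rather than the procedure used in the study.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable, Tuple

Trial = Tuple[str, float, bool]  # (condition, completion_time_s, succeeded)


def summarize_trials(trials: Iterable[Trial]) -> Dict[str, Dict[str, float]]:
    """Compute mean completion time and success rate for each condition."""
    grouped = defaultdict(list)
    for condition, time_s, succeeded in trials:
        grouped[condition].append((time_s, succeeded))

    summary = {}
    for condition, rows in grouped.items():
        summary[condition] = {
            # Assumption: failed trials still count towards the mean time.
            "mean_time_s": mean(t for t, _ in rows),
            "success_rate": sum(1 for _, ok in rows if ok) / len(rows),
        }
    return summary


# Illustrative, made-up records (not data from the study):
example = [("baseline", 10.0, True), ("baseline", 14.0, False),
           ("highlight", 8.0, True)]
print(summarize_trials(example))
```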

We recruited twelve participants (4 male and 8 female), aged 19 to 28, for the study. Eight of the participants had previously used smartphone AR systems (e.g., Pokémon Go), but none had any experience with free-hand AR interactions on smartphones.

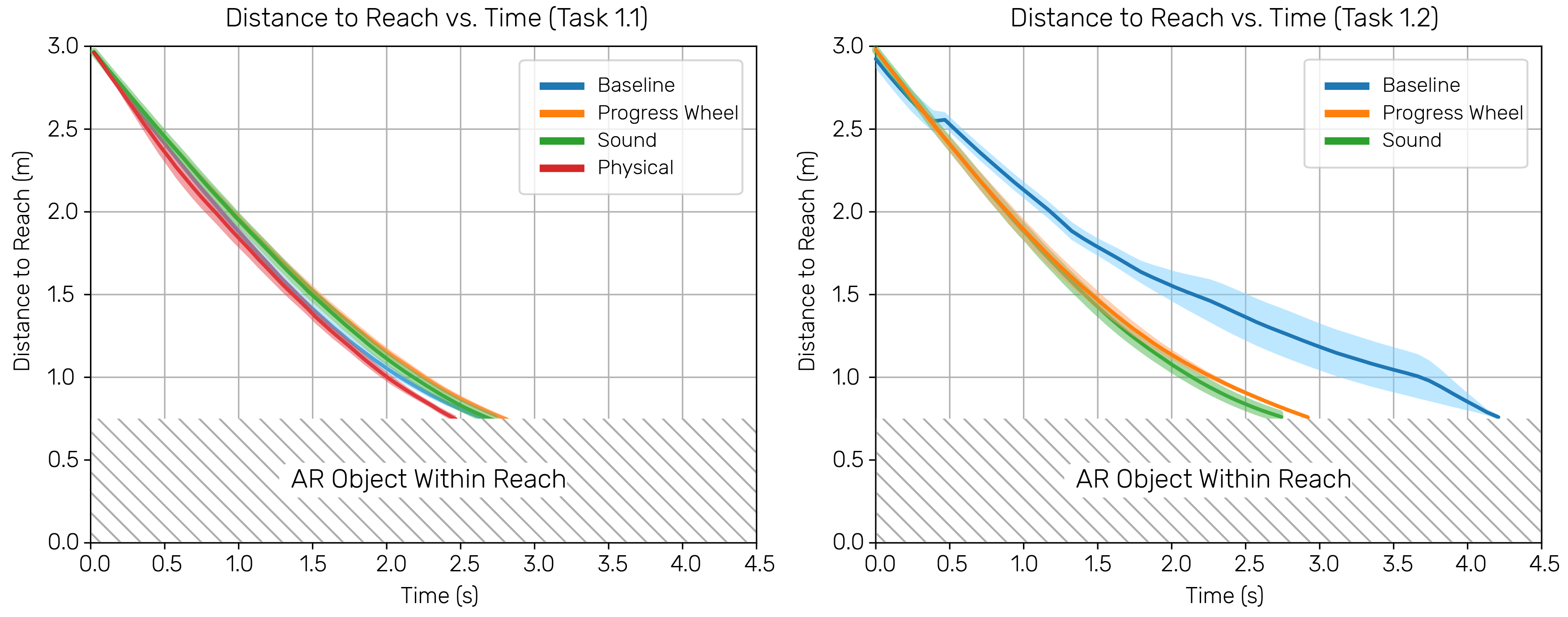

A total of 360 trials were collected from the twelve participants. I wrote a Python script to extract the distance data (the distance between a participant and the target object) for all participants under the different conditions, and plotted the average distance to the target over time. The resulting graph is shown below.

The color-shaded area around each curve represents the standard error. For the simpler Task 1.1, participants did not reach target objects any faster than with the baseline. However, for the harder Task 1.2 (in which the target object is moving), participants reached the target objects significantly faster with the aid of Portal-ble’s feedback mechanisms.
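The averaging behind such a plot can be sketched as follows. This is not the original analysis script: it assumes each trial's distance trace has already been resampled to common time steps, truncates all traces to the shortest one, and uses the sample standard deviation divided by the square root of the number of traces as the standard error.

```python
import math
from statistics import mean, stdev
from typing import List, Sequence, Tuple


def mean_and_standard_error(
        traces: Sequence[Sequence[float]]) -> Tuple[List[float], List[float]]:
    """Per-time-step mean and standard error across distance traces."""
    n_steps = min(len(trace) for trace in traces)  # truncate to shortest trace
    means, errors = [], []
    for step in range(n_steps):
        values = [trace[step] for trace in traces]
        means.append(mean(values))
        if len(values) > 1:
            errors.append(stdev(values) / math.sqrt(len(values)))
        else:
            errors.append(0.0)  # standard error is undefined for one sample
    return means, errors
```

The shaded band for one condition would then span `means[i] - errors[i]` to `means[i] + errors[i]` at each time step.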

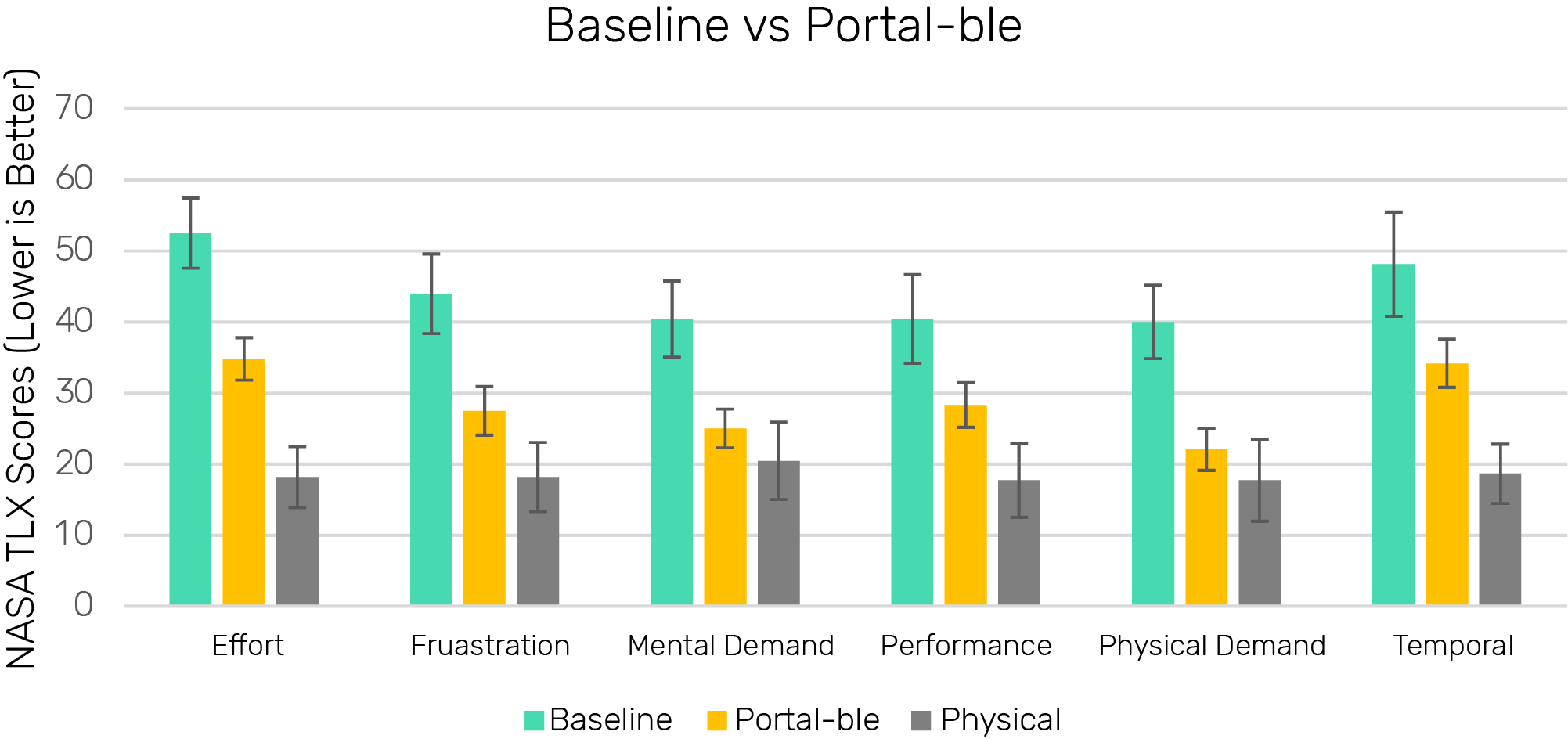

In terms of cognitive ratings, our NASA-TLX results show that participants using Portal-ble had lower mental load, experienced less frustration, and rated their overall performance as better. Interacting with physical objects was naturally intuitive to participants and therefore had lower scores for all the factors.

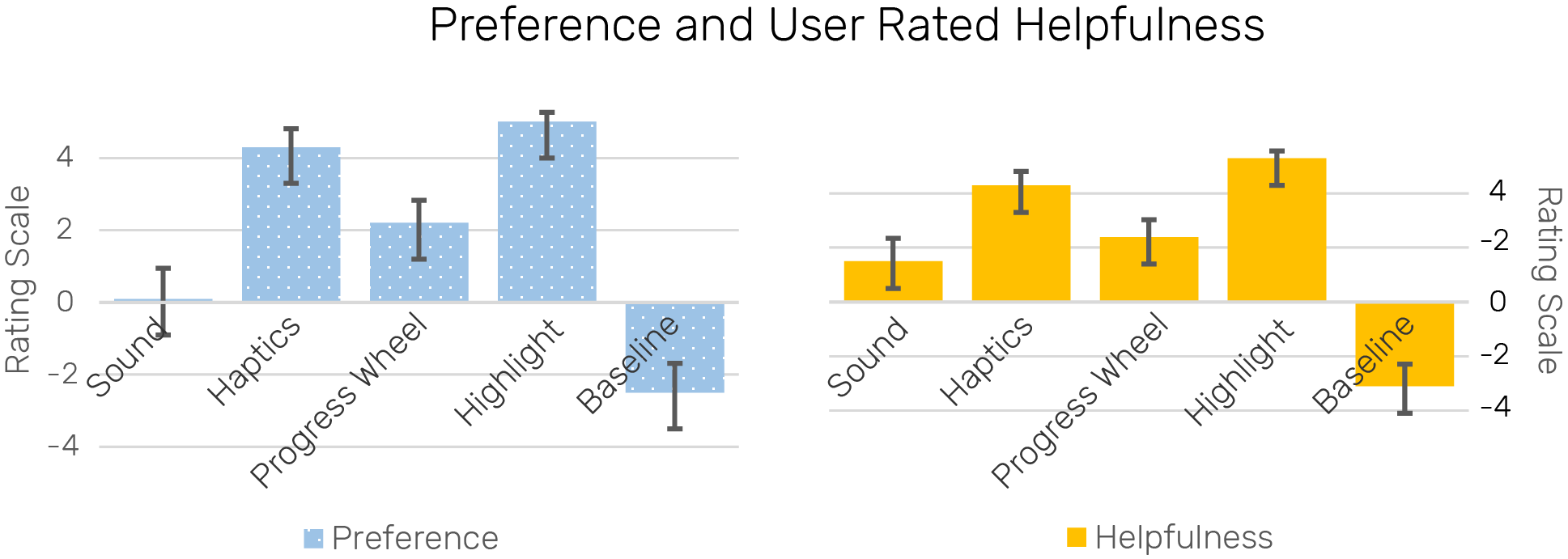

Overall, the participants preferred the feedback mechanisms of Portal-ble and rated them as more helpful than the baseline. Specifically, the mechanisms addressing I2, haptics and highlight, were favored over the others. Sound turned out to be a controversial form of feedback, as five participants noted that it was “annoying” and “sounded like an alarm”. P1 stated that “the changing frequency helps me knowing the object is getting closer, but it also makes me nervous.”

Overall, our data and results supported our research hypothesis: Portal-ble can indeed improve user depth perception and success in grabbing and manipulating virtual objects, and reduce user cognitive effort, especially for harder tasks (Task 1.2).

@inproceedings{Qian2019portalble,
  author = {Qian, Jing and Ma, Jiaju and Li, Xiangyu and Attal, Benjamin and Lai, Haoming and Tompkin, James and Hughes, John F. and Huang, Jeff},
  title = {Portal-Ble: Intuitive Free-Hand Manipulation in Unbounded Smartphone-Based Augmented Reality},
  year = {2019},
  isbn = {9781450368162},
  publisher = {Association for Computing Machinery},
  address = {New York, NY, USA},
  url = {https://doi.org/10.1145/3332165.3347904},
  doi = {10.1145/3332165.3347904},
  booktitle = {Proceedings of the 32nd Annual ACM Symposium on User Interface Software and Technology},
  pages = {133–145},
  numpages = {13},
  keywords = {smartphone, 3d hand manipulation, mid-air gesture, augmented reality},
  location = {New Orleans, LA, USA},
  series = {UIST '19}
}